Today no one is surprised that a company has its own data center. Few employees know what it actually is, yet any business that wants real stability needs one. In other words, a data center is used wherever a company must run without interruption, scale and stay manageable, and the stability of the business depends directly on its IT infrastructure.

What is this?

As information technology has developed, almost any organization that works with information has come to have its own data center. What is it? A data processing center, which the professional literature usually calls a data center. As the name suggests, such a facility handles every operation related to processing information: creating or generating data, archiving and storing files, and serving them at the user's request. A data center is also responsible for secure data destruction, that is, deleting particular files without harming other data and, where the information is sensitive and must not fall into third hands, without any possibility of recovery.

Where can they be used?

Today there are quite a few institutions, including land registries, the Pension Fund and various libraries, whose direct business is collecting and storing information. Some information is generated by the business itself, for example the information used by various help services. Other information takes no direct part in business processes but is necessary for carrying them out: personnel records, for instance, and databases of user accounts in various information systems.

Industrial holdings create specialized electronic archives to perform calculation tasks, store documents, and automate business processes. Different organizations thus use different types of information and face different processing tasks. A data center is created to solve exactly these problems. In practice, only the system administrator responsible for the equipment knows what it really is.

When is the data center used?

These problems were solved at different times by different technical means. In the twentieth century, electronic computers became the basis of modern business, taking over the vast majority of computing tasks, and the emergence of storage devices made it possible to get rid of paper archives entirely, replacing them with more compact yet accessible electronic and tape media. Even the first electronic computers needed dedicated computer rooms in which the required climate was maintained so that the equipment ran stably without overheating.

Server rooms and their features

With the arrival of personal computers and small servers, the computing equipment of almost any company came to be housed in dedicated server rooms. In the vast majority of cases, a server room is simply a room fitted with a household air conditioner and an uninterruptible power supply to keep the equipment running continuously under normal conditions. These days, however, this option suits only enterprises whose business processes do not depend heavily on the information used and the available computing resources.

What is the difference between a data center and a server room?

By and large, a modern data center is an expanded copy of a traditional server room, because in fact they have a lot in common - the use of engineering systems that support the continuous operation of equipment, the need to provide the required microclimate, as well as an appropriate level of security. But at the same time, there are a number of differences that are decisive.

The data processing center is equipped with a full set of various engineering systems, as well as specialized components that ensure the normal and stable operation of the company’s information infrastructure in the mode required for business operation.

Where is this equipment used?

In Russia, data processing using such centers has become in demand since 2000, when various banking institutions, government agencies, and enterprises in the oil industry began to order such equipment. It is worth noting that the data center first appeared back in 1999, when it began to be used to process declarations and income certificates for all residents of Moscow and the region.

One of the first large data centers was the one used at Sberbank's center. In 2003, with the support of Rostelecom, the first republican data processing center was organized in Chuvashia to systematize archival data. Such facilities were also set up by various local authorities, and in 2006 a center was opened to process data from the Kurchatov Institute. The following year, VTB-24 and Yandex began using their own data centers. Moscow thus quickly adopted such facilities, as did other large Russian cities.

Where should you install a data center?

Nowadays, almost every large geographically distributed company uses its own data center, especially if the business is very dependent on the IT organization. Examples include telecom operators, retail companies, travel and transport companies, medical institutions, industrial holdings and much more.

A data center can be dedicated to a specific enterprise or used as a multi-tenant facility. A multi-tenant data center provides a wide range of services, including business continuity, hosting, server rental and colocation, and many others. Data center services are most relevant for small and medium-sized businesses, which can use them to avoid modernizing their own IT infrastructure and ultimately receive guaranteed reliability and high-quality service.

The key to a successful data center is competent design

Proper design of a data center prevents serious problems during the operation of the equipment and reduces operating costs. In general, the structure of such a center is divided into four main elements: engineering infrastructure, building, software and specialized equipment. Buildings and premises for such equipment are constructed according to a variety of standards whose main purpose is to ensure safety and reliability. In Western countries the requirements for data centers are often extremely strict and sometimes quite unusual. In particular, the building must be located at least 90 meters from the highest point that flood water has reached over the past 100 years, which is far from as easy to achieve as it might seem at first glance.

We took a non-standard approach to writing this material about data centers. On the one hand, the article is informational and educational; on the other hand, we will use a real Moscow data center, TEL Hosting, as an example.

A Data Processing Center (DPC) is a high-tech facility for housing computing equipment. Initially, data centers served mainly the internal needs of enterprises and organizations. Recently the term has become widespread in the commercial sphere, owing to growing client interest in and demand for private data center services.

Data center services

Data processing centers offer their clients a whole range of telecommunications services related to information storage and processing. In addition to standard solutions, some DCs offer additional services.

Standard data center services:

Additional data center services:

- Backup

- Cloud solutions

- Administered server

- Remote Desktop

Technologies used in data centers

A highly developed technical infrastructure that allows maintaining optimal conditions for client equipment is a key characteristic for a modern data center. The TEL data center is a clear example of such a facility.

A detailed account of the technical side of our data center (from which you can get a general idea of how a data center works) is posted on the corporate blog on Habr.

Currently, there are more than 80 commercial data centers operating in Moscow. High competition and proximity to the channels of mainline operators make Moscow prices the most competitive on the Russian market. In Russian regions, prices for data center services are several times higher than in Moscow.

Data Center Differences

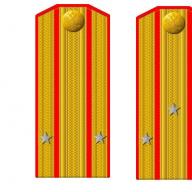

TIA-942 standard

According to this standard, every DC is assigned a level, from Tier 1 to Tier 4.

The TEL data center formally complies with the Tier 2+ level.

Format

According to this criterion, the following are distinguished:

a) autonomous data centers

b) data centers renting space from larger providers

c) server rooms, usually used for the needs of a specific enterprise

d) “trash” data centers (without proper infrastructure).

The TEL Hosting data center is owned by the telecommunications company TEL and belongs to the first type of facilities.

Mikhail Polyakov, Deputy General Director of INSYSTEMS (LANIT group of companies)

Data center: build or rent?

Discussions about what is more profitable - your own data center or renting server equipment have not subsided, probably, since the advent of the first data center, or at least from the moment when this concept arose.

As in any dispute, neither side can be objective: data center operators will almost certainly argue for renting, while companies that specialize in building data centers and their infrastructure will talk up the advantages and necessity of owning one.

But the answer to the question of what to do, build your own data center or rent server equipment, can only be found by analyzing a number of such important factors as the level of capital and operating costs, the degree of development and availability of data center services, IT services and information security systems. In addition, the choice of services from your own or leased data center is determined by a number of obvious factors. Let's take a closer look at the most important ones.

Business direction and scale

The larger the company, the more likely it is to need its own data center. Take an industrial company with state participation that runs its own research and development, for example in the interests of national defense: it needs high-performance computing, reliable storage of large amounts of data and other IT services. Will it entrust these functions to a public data center operator? Most likely not, and even if it tried, it would not be allowed to. The only way out in this case is to create its own data center.

Due to the exceptional importance of ensuring a high level of information security and the huge costs that arise from its leaks and loss, banks and large financial institutions will take this route. However, it can be assumed that in this sector, as Internet banking develops, a transition will begin from the construction of its own data centers to the rental of services.

But even if a company decides to rent a data center, someone must first build it. Therefore, data center service providers will also build their own data centers. As the market develops, data center operators will begin to offer ever higher levels of IT services. This transition will rest primarily on their own resources. Hypothetically, data center operators can resort to leasing capacity, but only when services must be offered to a customer very quickly and their own resources are not enough.

Renting data center services is most suitable for companies offering Internet services (portals, search engines, online stores, social networks, etc.). Not to mention the fact that building your own data center, for example, for an online store, is absolutely pointless.

Geographical location of the region, level of development and availability of IT services. Territorial remoteness from main highways, industrial centers and transport hubs, lack of necessary infrastructure and communication channels undoubtedly influence the choice between your own data center and rent. However, as communication channels develop and the availability of remote data center services increases, this will be leveled out.

Prospects and dynamics of business development, economic conditions. Obviously, the choice of solution is seriously influenced by business dynamics and the general economic situation. Many companies decide to build their own data center when they are at the growth stage. Moreover, it is usually assumed that it will be used not only to solve its own problems, but also to rent out free resources. Data center operators are also acting in a growing market, investing in capacity development.

During a recession, everyone, without exception, begins to think about optimizing IT budgets and, accordingly, renting data center services. In this sense, rent, being a very flexible instrument, opens up enormous optimization opportunities. In addition, data center operators, expanding their business, can offer additional services, for example, communication services for tenants, dedicated hermetic zones (modules), and information security services.

Every business or company can find the right answer to the question about renting or owning a data center only by taking into account many factors and only for a certain time interval, and the dynamic market for IT services is constantly changing the conditions for making one decision or another. Today, all circumstances may clearly indicate the need to build your own data center, but tomorrow they will suggest the opposite decision. In such a situation, some operators will build their own data centers, while others will rent their services. As cloud technologies develop, the segmentation and specialization of data center service operators by type and level of services provided will deepen.

Now you should think about renting data center services. In connection with the development of virtualization and cloud technologies, renting server equipment (dedicated server) and placing your own servers in provided racks (colocation) are only part of the services traditionally provided by data centers. In addition to those listed, data centers provide IaaS (Infrastructure as a Service), SaaS (Software as a Service) and PaaS (Platform as a Service) services.

Recently, the development of cloud technologies has even given rise to such a concept as XaaS (Anything as a Service), that is, the ability to provide any services via the Internet. All this not only expands the list of consumers of data center services, but also creates new types of providers of these services. In turn, higher-level data center service providers are simultaneously consumers of lower-level services. For example, a SaaS provider may be a consumer of IaaS, colocation or dedicated server. An important subjective point that influences the choice of a decision to own a data center or lease may be the consumer’s awareness of the services that are offered to him.

The vast majority already understand what renting a server or a rack means. When the meaning and advantages of other services become just as familiar and understandable, the number of tenants will increase noticeably.

Growth amid instability

In 2014, the Russian data center market grew by almost 30% and amounted to 11.9 billion rubles. (9.3 billion in 2013).

Such data are provided in the study by iKS-Consulting “Russian market of commercial data centers 2014-2018”, published in December 2014. One of the key factors in market growth was the introduction of new data center capacities in the Russian Federation. In 2014, the area of computer rooms increased by 17.5 thousand sq.m, and the number of racks - by 3.5 thousand. Thus, at the end of 2014, the total area of computer rooms in commercial data centers in the Russian Federation will reach 86 thousand sq.m. (growth of 36.5%), racks – 25.5 thousand (growth of 28%) (see Fig. 1).

However, market experts note a slowdown in the growth of the Russian data center market due to the unstable economic situation. Among the reasons that influence the development of this market are an increase in the cost of some imported equipment for data centers, changes in credit conditions during their construction or development, and uncertainty arising in connection with the imposition of sanctions by the United States and the European Union.

According to the annual report “Russian Data Center Market 2014” by RBC.Research, profitability from data center services in 2014 increased for 60% of companies, decreased for 10%, and remained unchanged for 30%, whereas in 2013 an increase in profitability was noted by 71% of market players.

An increase in the energy efficiency of data centers by more than 5% in 2013 was noted by 71% of surveyed companies, in 2014 – already 78%. For the rest of the study participants, this indicator remained at the same level.

The minimum cost limit for data center services in 2014 increased for 11% of study participants. At the same time, for 78% of companies this indicator remained at the level of last year.

- Do you have a data center?

- Yes, we are building for 100 racks.

- And we are building for 200.

- And we are at 400 with an independent gas power plant.

- And we have 500 with water cooling and the ability to remove up to 10 kW of heat from one rack.

- And we watch the market and are surprised.

The situation on the Moscow (and Russian in general) data center market looked deplorable two years ago. The total shortage of data centers as such, and of space in existing ones, meant that a 3-4 kW rack that cost about 700 USD in 2004-2005 cost 1500-2000 USD by 2007. In an effort to meet growing demand, many operators and system integrators launched “construction projects of the century” to create the best and largest data centers. As a result, about 10 data centers in Moscow are currently at the stage of opening and initial filling, and several more are at the design stage. Telenet, i-Teco, Dataline, Hosterov, Agava, Masterhost, Oversun, Synterra and a number of other companies opened their own data centers at the turn of 2008 and 2009.

The desire to invest money in large-scale telecommunications projects was explained not only by fashion, but also by a number of economic reasons. For many companies, building their own data center was a forced measure: the incentive for this, in particular for hosting providers or large Internet resources, was the constantly growing costs of infrastructure. However, not all companies have calculated their strengths correctly; for example, the data center of one large hosting provider has turned into a long-term construction project that has been underway for two years. Another hosting provider, which built a data center outside the Moscow Ring Road, has been trying to sell it to at least some large clients for six months now.

Investment projects launched at the height of the crisis also cannot boast of a stable influx of clients. Quite often the cost per rack, included in the business plan at the level of 2000 USD, in the current economic conditions is reduced to 1500-1400 USD, postponing the project’s achievement of self-sufficiency for years.

Several grandiose projects for the construction of data centers with thousands of racks with gas power plants outside the Moscow Ring Road remained unrealized. One of these projects, in particular, was “buried” by a fixed-line operator (due to the company’s takeover by a larger player).

Thus, to date, only those data centers that were built more than three years ago and which were filled during the shortage years of 2004-2007 have paid off. In a crisis - in conditions of an excess of free space in data centers - the construction of more and more new data centers, it would seem, looks like sheer madness.

However, not everything is so bad: even in a crisis, subject to certain conditions, you can and should create your own data center. The main thing is to understand a number of nuances.

What makes a company create its own data center?

There is only one motive: business security and risk minimization. The risks are as follows. First, commercial data centers in Moscow correspond to level 1-2, which means constant problems with power supply and cooling. Second, commercial data centers categorically refuse to cover losses from downtime and lost profits. Check the maximum fine or penalty you can expect in case of downtime: it usually does not exceed 1/30 of the rent per day of downtime.

And thirdly, you are not able to control the real state of affairs in a commercial data center:

- this is a commercial organization that must make a profit from its activities and sometimes saves even at the expense of the quality of its services;

- you assume all the risks of a third-party company, for example - power outage (even short-term) for an outstanding debt;

- the commercial data center may terminate its contract with you at any time.

Data center economics

It is very important to estimate the costs of construction and operation in advance, correctly and completely, and for this you need to determine the class of the data center that you will build. Below is the estimated cost of building a turnkey site for a 5 kW rack (excluding the cost of electricity).

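| Data center level | Turnkey construction cost per 5 kW rack |
| --- | --- |
| Level 1 | From 620 thousand rubles |
| Level 2 | From 810 thousand rubles |
| Level 3 | From 1300 thousand rubles |
| Level 4 | From 1800 thousand rubles |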
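As a rough illustration of how these figures can be used, the sketch below multiplies the per-rack minimum by a rack count. The function name and the dictionary are hypothetical, the amounts are read as thousands of rubles per 5 kW rack, and because the table gives "from" prices the result is only a lower bound.

```python
# Minimum turnkey cost per 5 kW rack, in thousand rubles (figures from the table).
MIN_COST_PER_RACK = {1: 620, 2: 810, 3: 1300, 4: 1800}


def min_construction_cost(level: int, racks: int) -> int:
    """Return a lower-bound construction cost in thousand rubles."""
    if level not in MIN_COST_PER_RACK:
        raise ValueError(f"unknown level: {level}")
    if racks < 0:
        raise ValueError("racks must be non-negative")
    return MIN_COST_PER_RACK[level] * racks


# Compare the lower bounds for a hypothetical 100-rack site.
for level in sorted(MIN_COST_PER_RACK):
    cost = min_construction_cost(level, 100)
    print(f"Level {level}: from {cost} thousand rubles for 100 racks")
```

Electricity and other operating costs are not part of this estimate.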

The cost of operating a data center depends on many factors. Let's list the main ones.

- Cost of electricity = amount of electricity consumed + 30% for heat removal + transmission and conversion losses (from 2 to 8%). And this is subject to the implementation of all cost-cutting measures - such as reducing losses and proportional cooling (which in some cases, alas, is impossible).

- The cost of renting premises is from 10 thousand rubles per sq. m.

- The cost of servicing air conditioning systems is approximately 15-20% of the cost of the air conditioning system per year.

- The cost of servicing power systems (UPS, diesel generator set) is from 5 to 9% of the cost.

- Rental of communication channels.

- Payroll of the maintenance service.
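The electricity item in the list above can be sketched as a small calculation. This is a minimal sketch under stated assumptions: both the 30% for heat removal and the 2-8% losses are taken as fractions of the equipment's own consumption, and the tariff is a hypothetical parameter that does not come from the text.

```python
COOLING_FRACTION = 0.30  # extra energy for heat removal, from the text


def billed_energy_kwh(it_energy_kwh: float, loss_fraction: float = 0.05) -> float:
    """Energy to pay for: equipment consumption + heat removal + losses.

    Assumption: both overheads are fractions of the equipment consumption.
    loss_fraction must lie in the 2-8% range quoted in the text.
    """
    if it_energy_kwh < 0:
        raise ValueError("it_energy_kwh must be non-negative")
    if not 0.02 <= loss_fraction <= 0.08:
        raise ValueError("loss_fraction is expected to be between 0.02 and 0.08")
    return it_energy_kwh * (1 + COOLING_FRACTION + loss_fraction)


def electricity_cost(it_energy_kwh: float, tariff_per_kwh: float,
                     loss_fraction: float = 0.05) -> float:
    """Cost of electricity for a given (hypothetical) tariff per kWh."""
    return billed_energy_kwh(it_energy_kwh, loss_fraction) * tariff_per_kwh


# One 5 kW rack running for a 30-day month consumes 5 * 24 * 30 kWh itself.
rack_kwh = 5 * 24 * 30
best = billed_energy_kwh(rack_kwh, 0.02)
worst = billed_energy_kwh(rack_kwh, 0.08)
print(f"Billed energy per rack: {best:.0f} to {worst:.0f} kWh per month")
```

Even in the best case the bill covers roughly a third more energy than the equipment itself consumes.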

What does a data center consist of?

There are a number of formalized requirements and standards that must be met when constructing a data center: after all, the reliability of its operation is critically important.

Currently, the international classification of data center readiness (reliability) levels, from 1 to 4, is widely used (see table). Each level assumes a certain availability of data center services, ensured by different approaches to redundancy of the power, cooling and channel infrastructure. The lowest (first) level assumes availability of 99.671% of the time per year (up to about 28.8 hours of downtime), and the highest (fourth) level implies availability of 99.995%, i.e. no more than about 26 minutes of downtime per year.
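The link between an availability percentage and the downtime it allows is simple arithmetic. The sketch below assumes a 365-day year; the function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, leap years ignored


def allowed_downtime_hours(availability_percent: float) -> float:
    """Hours per year a site may be down at the given availability."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


# The two availability figures quoted in the text.
for level, availability in [(1, 99.671), (4, 99.995)]:
    hours = allowed_downtime_hours(availability)
    print(f"Level {level}: {hours:.1f} h per year ({hours * 60:.0f} min)")
```

For these two levels the calculation gives roughly 28.8 hours and 0.4 hours (about 26 minutes) per year, which agrees with the allowable-downtime row of the reliability table.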

Data center reliability level parameters

| Parameter | Level 1 | Level 2 | Level 3 | Level 4 |
| --- | --- | --- | --- | --- |
| Cooling and power input paths | One | One | One active and one standby | Two active |
| Component redundancy | N | N+1 | N+1 | 2(N+1) |
| Division into several autonomous blocks | No | No | No | Yes |
| Hot-swappable | No | No | Yes | Yes |
| Building | Part or floor | Part or floor | Freestanding | Freestanding |
| Staff | None | At least one engineer per shift | At least two engineers per shift | More than two engineers, 24-hour duty |
|  | 100 | 100 | 90 | 90 |
| Auxiliary areas, % | 20 | 30 | 80-90 | 100+ |
| Raised floor height, cm | 40 | 60 | 100-120 |  |
|  | 600 | 800 | 1200 | 1200 |
| Electricity | 208-480 V | 208-480 V | 12-15 kV | 12-15 kV |
| Number of points of failure | Many + operator errors | Many + operator errors | Few + operator errors | None + operator errors |
| Allowable downtime per year, h | 28.8 | 22 | 1.6 | 0.4 |
| Time to create infrastructure, months | 3 | 3-6 | 15-20 | 15-20 |
| Year of creation of the first data center of this class | 1965 | 1975 | 1980 | 1995 |

This classification by levels was proposed by Ken Brill back in the 1990s. It is believed that the first data center of the highest level of availability was built in 1995 by IBM for UPS as part of the Windward project.

In the USA and Europe, there is a defined set of requirements and standards governing the construction of data centers. For example, the American standard TIA-942 and its European analogue EN 50173-5 set out the following requirements:

- to the location of the data center and its structure;

- to cable infrastructure;

- to reliability, specified by infrastructure levels;

- to the external environment.

In Russia, at the moment, no current requirements for the organization of data centers have been developed, so we can assume that they are set by standards for the construction of computer rooms and SNiPs for the construction of technological premises, most of the provisions of which were written back in the late 1980s.

So, let’s focus on the three “pillars” of data center construction, which are the most significant.

Power supply

How to build a reliable and stable power supply system, avoid future operational failures and prevent equipment downtime? The task is not simple, requiring careful and scrupulous study.

The main mistakes are usually made at the design stage of data center power supply systems. They (in the future) can cause a failure of the power supply system for the following reasons:

- overload of power lines, as a result - failure of electrical equipment and sanctions from energy regulatory authorities for exceeding the consumption limit;

- serious energy losses, which reduces the economic efficiency of the data center;

- limitations in the scalability and flexibility of power supply systems associated with the load capacity of power lines and electrical equipment.

The power supply system in the data center must meet the modern needs of technical sites. In the data center classification proposed by Ken Brill, in relation to power supply, these requirements would look like this:

- Level 1 - it is enough to provide protection against current surges and voltage stabilization, this can be solved by installing filters (without installing a UPS);

- Level 2 - requires installation of a UPS with bypass with N+1 redundancy;

- Level 3 - parallel operating UPSs with N+1 redundancy are required;

- Level 4 - UPS systems with 2(N+1) redundancy.
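To make the redundancy notation in the list above concrete, the sketch below counts UPS modules per level. Here N is the number of modules needed to carry the load with no spare; the function name, the example load and the module rating are hypothetical.

```python
import math


def ups_units(load_kw: float, unit_kw: float, level: int) -> int:
    """Number of UPS modules implied by each level's redundancy scheme.

    Level 1 needs no UPS, levels 2 and 3 use N+1, level 4 uses 2(N+1).
    """
    if load_kw <= 0 or unit_kw <= 0:
        raise ValueError("load_kw and unit_kw must be positive")
    n = math.ceil(load_kw / unit_kw)  # modules needed just to carry the load
    if level == 1:
        return 0
    if level in (2, 3):
        return n + 1
    if level == 4:
        return 2 * (n + 1)
    raise ValueError(f"unknown level: {level}")


# Hypothetical site: 500 kW of load served by 100 kW modules, so N = 5.
for level in (1, 2, 3, 4):
    print(f"Level {level}: {ups_units(500, 100, level)} UPS modules")
```

Levels 2 and 3 need the same number of modules in this count; the difference between them lies in how the modules are connected (bypass versus parallel operation), which the sketch does not model.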

Today on the market you can most often find data centers with a second-level power supply, less often - a third (but in this case, the cost of placement usually increases sharply, and is not always justified).

According to our estimates, a data center with full redundancy usually costs 2.5 times more than a simple data center, so it is extremely important to decide at the pre-project level what category the site should correspond to. Both underestimation and overestimation of the importance of the permissible downtime parameter equally negatively affect the company’s budget. After all, financial losses are possible in both cases - either due to downtime and failures in the operation of critical systems, or due to throwing money away.

It is also very important to monitor how electricity consumption will be recorded.

Cooling

Properly organized heat removal is an equally complex and important task. Very often the total heat output of the room is taken and, on that basis, the capacity and number of the required air conditioners are calculated. This approach, although very common, cannot be called correct, since it leads to additional costs and losses in cooling efficiency. Errors in cooling calculations are the most common ones, as evidenced by the operations staff of almost every data center in Moscow hosing down heat exchangers with fire hoses on a hot summer day.

The quality of heat removal is affected by the following points.

Architectural features of the building. Unfortunately, not all buildings have a regular rectangular shape with constant ceiling heights. Height changes, walls and partitions, structural features and exposure to solar radiation can all lead to additional difficulties in cooling certain areas of the data center. Therefore, the calculation of cooling systems should be carried out taking into account the characteristics of the room.

Height of ceilings and raised floors. Everything is very simple here: a raised floor is never too high, but ceilings that are too high lead to stagnation of hot air (which then has to be removed by additional means), and ceilings that are too low impede the movement of hot air to the air conditioner. With a low raised floor (< 500 mm), cooling efficiency drops sharply.

Temperature and humidity in the data center. As a rule, normal operation of the equipment requires a temperature of 18-25 degrees Celsius and a relative humidity of 45 to 60%. Staying within these ranges protects the equipment from shutting down due to overcooling, from failure caused by condensation (at high humidity) or static electricity (at low humidity), and from overheating.
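A monitoring check for these ranges might look like the sketch below. The function and the alert strings are hypothetical; only the numeric limits come from the text.

```python
TEMP_RANGE_C = (18.0, 25.0)        # recommended temperature range, degrees Celsius
HUMIDITY_RANGE_PCT = (45.0, 60.0)  # recommended relative humidity, percent


def climate_alerts(temp_c: float, humidity_pct: float) -> list:
    """Return the risks implied by a temperature and humidity reading."""
    alerts = []
    if temp_c < TEMP_RANGE_C[0]:
        alerts.append("overcooling")
    elif temp_c > TEMP_RANGE_C[1]:
        alerts.append("overheating")
    if humidity_pct < HUMIDITY_RANGE_PCT[0]:
        alerts.append("static electricity risk")
    elif humidity_pct > HUMIDITY_RANGE_PCT[1]:
        alerts.append("condensation risk")
    return alerts


print(climate_alerts(22.0, 50.0))  # reading inside both ranges
print(climate_alerts(30.0, 30.0))  # hot and dry room
```

An empty list means the reading is inside both ranges.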

Communication channels

It would seem that such an “insignificant” component as communication channels cannot cause any difficulties. That is why neither those who rent a data center nor those who build one pay enough attention to it. But what is the use of flawless, uninterrupted operation of the data center if the equipment is unreachable, i.e. for practical purposes does not exist? Be sure to note: fiber optic lines must be fully duplicated, and more than once. By “duplicated” we mean not only two fiber optic cables from different operators, but also that they must not run through the same cable duct.

It is important to understand that a truly developed communications infrastructure requires significant one-time costs and is by no means cheap to operate. It should be regarded as one of the very tangible components of the cost of a data center.

Own data center “one-two-three”

Here it’s worth making a small digression and talking about an alternative method for creating your own data center. We are talking about BlackBox - a mobile data center built into a transport container. Simply put, BlackBox is a data center located not in a separate room indoors, but in a kind of trailer (see picture).

BlackBox can be brought into full working order in a month, i.e. 6-8 times faster than a traditional data center. There is no need to adapt the infrastructure of the company building to it (to create a special fire safety, cooling or security system, etc.). And most importantly, it does not require a separate room: you can place it on the roof, in the yard and so on. All that is really needed is a water supply for cooling, an uninterruptible power supply system and an Internet connection.

The cost of the BlackBox itself is about half a million dollars. And here it should be noted that BlackBox is a fully configured (but with the possibility of customization) virtualization data center located in a standard transport container.

Two companies have already received this container for preliminary testing. These are the Linear Accelerator Center (Stanford, USA) and... the Russian company Mobile TeleSystems. The most interesting thing is that MTS launched BlackBox faster than the Americans.

Overall, the BlackBox comes across as a very well thought out and reliable design, although there are of course some shortcomings worth mentioning.

An external power source of at least 300 kW is required. This means building or reconstructing a transformer substation, installing a main switchboard and laying a cable route. It is not that simple: design work, coordination and approval of the project at all levels, installation of equipment...

UPSs are not included in the delivery package. Again this means design work, choosing a supplier, and equipping a room for the UPS with an air conditioning system (batteries are very sensitive to temperature).

The purchase and installation of a diesel generator set will also be required. Without it, the problem with redundancy cannot be solved, and this is another round of approvals and permits (the average delivery time for such units is from 6 to 8 months).

Cooling - an external source of cold water is required. You will have to design, order, wait, install and launch a redundant chiller system.

Summary: you will essentially spend six months building a small data center, but instead of a hangar (premises) you buy a container with servers that is balanced and thought out to the smallest detail, with a set of convenient options and software for managing all this equipment, and install it in a month.

The more data centers, the..?

Currently, the largest data center about which there is information in open sources is a Microsoft facility in Ireland, in which the corporation plans to invest more than $500 million. Experts say that this money will be spent on creating the first computer center in Europe to support various network Microsoft services and applications.

Construction of the facility in Dublin, with a total area of 51.09 thousand sq. m and room for tens of thousands of servers, began in August 2007 and should be completed (according to the company’s own forecasts) in mid-2009.

Unfortunately, the available information about the project tells us little, because what matters is not the area but the energy consumption. Based on this parameter, we propose to classify data centers as follows.

- “Home data center” is an enterprise-level data center that requires serious computing power. Power is up to 100 kW, which allows you to place up to 400 servers.

- "Commercial Data Center". This class includes operator data centers, the racks in which are rented. Power - up to 1500 kW. Accommodates up to 6500 servers.

- "Internet Data Center" - a data center for an Internet company. Power - from 1.5 MW, accommodates 6,500 servers or more.
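The three classes above amount to a lookup by supplied power. A minimal sketch follows; the function name is an assumption, and because the text gives both “up to 1500 kW” and “from 1.5 MW”, the sketch arbitrarily assigns exactly 1500 kW to the Internet class.

```python
def classify_by_power(power_kw: float) -> str:
    """Map supplied power (kW) to the class names proposed in the text."""
    if power_kw <= 0:
        raise ValueError("power must be positive")
    if power_kw <= 100:
        return "Home data center"        # up to 100 kW, up to 400 servers
    if power_kw < 1500:
        return "Commercial Data Center"  # up to 1500 kW, up to 6500 servers
    return "Internet Data Center"        # from 1.5 MW, 6500 servers or more


for kw in (40, 800, 5000):
    print(kw, "kW ->", classify_by_power(kw))
```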

I will take the liberty of suggesting that when building a data center with a capacity of more than 15 MW, an “economy of scale” will inevitably arise. An error of 1.5-2 kW in a 40 kW “home” data center will most likely go unnoticed. A megawatt-sized mistake would be fatal to a business.

In addition, one can reasonably assume in this situation that the law of diminishing returns is at work (as a consequence of economies of scale). It manifests itself in the need to combine large areas, enormous electrical power, proximity to main transport routes and laying railway tracks (this will be more economically feasible than delivering a huge amount of cargo by road). The cost of developing all this in terms of 1 rack or unit will be seriously above average for the following reasons: firstly, the lack of supplied power of 10 MW or more at one point (such a “diamond” will have to be artificially grown); secondly, the need to build a building or a group of buildings sufficient to accommodate a data center.

But if you suddenly managed to find about 5 MW of power (and this is already a lot of luck), with two redundant inputs from different substations, in a building of regular rectangular shape with a ceiling height of 5 m and a total area of 3.5 thousand sq. m, with no height differences, walls or partitions, and with about 500 sq. m of adjacent territory... Then, of course, it is possible to achieve the minimum cost per rack, of which there will be approximately 650.

The figures here are based on a consumption of 5 kW per rack, which is the de facto standard today, since an increase in consumption in the rack will inevitably lead to difficulties with heat removal, and as a result, to a serious increase in the cost of the solution. Likewise, reducing consumption will not bring the necessary savings, but will only increase the rental component and require the development of larger areas than is actually required (which will also have a detrimental effect on the project budget).
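A quick sanity check of these figures: 650 racks at 5 kW each draw 3.25 MW, which is 65% of the 5 MW input. The text does not say where the rest goes; reading it as cooling, UPS losses and other infrastructure is an assumption of this sketch, as are the function names.

```python
def racks_if_all_power_went_to_it(total_power_kw: float, kw_per_rack: float = 5.0) -> int:
    """Upper bound on rack count if every kilowatt fed the racks."""
    return int(total_power_kw // kw_per_rack)


def it_load_share(total_power_kw: float, racks: int, kw_per_rack: float = 5.0) -> float:
    """Fraction of the supplied power consumed by the racks themselves."""
    return racks * kw_per_rack / total_power_kw


total_kw = 5000.0  # about 5 MW, from the text
racks = 650        # rack count given in the text

print(racks_if_all_power_went_to_it(total_kw))  # theoretical ceiling
print(f"{it_load_share(total_kw, racks):.0%} of the input feeds the racks")
```

The gap between the theoretical ceiling of 1000 racks and the 650 quoted in the text shows how much of the input a design has to reserve for everything other than the servers.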

But we must not forget that the main thing is the compliance of the data center with the assigned tasks. In each individual case, it is necessary to seek a balance, based on the input data that we have. In other words, you will have to find a compromise between the distance from the main highways and the availability of free electrical power, ceiling height and room area, full redundancy and the project budget.

Where to build?

It is believed that the data center should be located in a separate building, usually without windows, equipped with the most modern video surveillance and access control systems. The building must have two independent electrical inputs (from two different substations). If the data center has several floors, then the floors must withstand high loads (from 1000 kg per sq. m). The internal part should be divided into sealed compartments with their own microclimate (temperature 18-25 degrees Celsius and humidity 45-60%). Cooling of server equipment should be provided using precision air conditioning systems, and power backup should be provided by both uninterruptible power supply devices and diesel generator sets, which are usually located next to the building and ensure the functioning of all electrical systems of the data center in the event of an emergency.

Particular attention should be paid to the automatic fire extinguishing system, which must, on the one hand, exclude false alarms, and on the other, respond to the slightest signs of smoke or the appearance of an open flame. A serious step forward in the field of data center fire safety is the use of nitrogen fire extinguishing systems and the creation of a fire-safe atmosphere.

The network infrastructure must also provide maximum redundancy for all critical nodes.

How to save?

You could start by saving on the quality of power in the data center and end by saving on finishing materials. But building such a “budget” data center looks strange, to say the least. What's the point of investing in a risky project and jeopardizing the core of your business?! In a word, saving requires a very balanced approach.

Nevertheless, there are several very costly budget items that are not only possible, but also necessary to optimize.

1. Telecommunication cabinets (racks). Installing closed cabinets in the data center, i.e. racks that each have side walls plus a front and back door, makes no sense for three reasons:

- the cost may differ by an order of magnitude;

- the load on the floors is higher on average by 25-30%;

- cooling capacity is lower (even taking into account the installation of perforated doors).

2. Structured cabling system (SCS). Again, there is no point in entangling the entire data center with optical patch cords and purchasing the most expensive and powerful switches if you do not intend to install equipment in all racks at once. The average time to fill a commercial data center is one and a half to two years. At the current pace of development of microelectronics, this is a whole era. And the wiring will have to be redone one way or another anyway - either you will miscalculate the required number of ports, or the communication lines will be damaged during operation.

Under no circumstances build a “copper” cross-connection in one place - you will go broke on the cable. It is much cheaper and smarter to install a telecommunications rack next to each row and run 1-2 copper patch panels from it to each rack. If you need to connect a rack, running an optical patch cord to the desired row is a matter of minutes. At the same time, no serious investment is needed at the initial stage, and the necessary scalability is ensured along the way.

3. Power supply. Believe it or not, the most important thing in data center power supply is efficiency. Choose electrical equipment and uninterruptible power supply systems carefully! From 5 to 12% of the cost of data center ownership can be saved by minimizing losses, such as conversion losses in the UPS (2-8%; older generations of UPS have lower efficiency) and losses when smoothing harmonic distortions with a harmonic filter (4-8%). Losses can be reduced by installing reactive power compensators and by shortening the power cable route.
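To see how the individual loss figures combine, the power path can be treated as stages in series, each passing on a fraction of what it receives. This is a simplification assumed for illustration (the function name is hypothetical), and it does not reproduce the 5-12% cost-of-ownership figure, which depends on tariffs and other costs the text does not give.

```python
def delivered_fraction(stage_losses: list[float]) -> float:
    """Fraction of input power that survives a chain of lossy stages.

    Each loss is a fraction (0.08 means 8 %). Stages are assumed to be in series.
    """
    remaining = 1.0
    for loss in stage_losses:
        if not 0.0 <= loss < 1.0:
            raise ValueError("each loss must be in the range [0, 1)")
        remaining *= 1.0 - loss
    return remaining


# Best and worst cases from the ranges in the text:
# UPS conversion 2-8 %, harmonic filter 4-8 %.
best = delivered_fraction([0.02, 0.04])
worst = delivered_fraction([0.08, 0.08])
print(f"best case: {best:.1%} delivered, worst case: {worst:.1%} delivered")
```

Under this model the difference between well-chosen and poorly chosen equipment is roughly nine percentage points of delivered power, which is paid for continuously over the life of the data center.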

Conclusion

What conclusions can be drawn? How to choose from all the variety the solution that suits you? This is certainly a complex and non-trivial question. Let us repeat: in each specific case it is necessary to carefully weigh all the pros and cons, avoiding meaningless compromises - learn to correctly assess your own risks.

On the one hand, when reducing costs, outsourcing IT services can be one of the saving options. In this case, it is optimal to use commercial data centers, obtaining a full range of telecommunications services without investing in construction. However, for large specialized companies and the banking sector, with the onset of a period of instability and freezing of the construction of commercial data centers, the question of building their own data center becomes acute...

On the other hand, the crisis phenomena that we have observed over the past year have sadly affected the economy as a whole, but at the same time serve as an accelerator for the development of more successful companies. Rents for premises have dropped significantly. The decline in the hype around electric capacity has made room for more efficient consumers. Equipment manufacturers are ready for unprecedented discounts, and labor prices have fallen by more than a third.

In a word, if you were planning to build your own data center, now, in our opinion, is the time.

The era of computers is already more than 50 years old, and accordingly, their life support infrastructure is just as old. The first computer systems were very difficult to use and maintain and required a special integrated infrastructure to function.

An incredible variety of cables connected various subsystems, and for their organization many technical solutions were developed that are still used today: equipment racks, raised floors, cable trays, etc. In addition, cooling systems were required to prevent overheating. And since the first computers were military, security issues and restrictions came first. Subsequently, computers became smaller, cheaper, more unpretentious and penetrated into a variety of industries. At the same time, the need for infrastructure disappeared, and computers began to be placed anywhere.

The revolution occurred in the 90s, after the spread of the client-server model. Computers that came to be regarded as servers were placed in separate rooms with a prepared infrastructure. In English these rooms were called Computer room, Server room or Data Center, while in the Soviet Union we called them “Computer rooms” or “Computing Centers”. After the collapse of the USSR and the popularization of English terminology, our computing centers turned into “server rooms” and “Data Processing Centers” (DPC). Are there fundamental differences between these concepts, or is it just a matter of terminology?

The first thing that comes to mind is scale: if it’s small, then it’s a server room, and if it’s large, then it’s a data center. Or: if there are only the company’s own servers inside, then it is a server room; and if server hosting services are provided to third-party companies, then it is a data center. Is that so? For the answer, let's turn to the standards.

Standards and criteria

The most common standard currently describing the design of data centers is the American TIA 942. Unfortunately, there is no Russian analogue; the Soviet SN 512-78 is hopelessly outdated (even though an edition was issued in 2000) and can only be considered from the point of view of general approaches.

The TIA 942 standard itself states that the purpose of its creation is to formulate requirements and guidelines for the design and installation of a data center or computer room. Let's assume that the data center is something that meets the requirements of TIA 942, and the server room is just some kind of room with servers.

So, the TIA 942 standard classifies 4 levels (TIERs) of data centers and names a number of parameters by which this classification can be carried out. As an example, I decided to check whether my server room, built along with the plant three years ago, is a real data center.

As a small digression, I’ll point out that the plant produces stamped parts for the automotive industry. We produce body parts for companies such as Ford and GM. The enterprise itself is small (total staff of about 150 people), but with a very high level of automation: the number of robots is comparable to the number of workers in the workshop. The main distinguishing feature of our production is its Just-In-Time work rhythm, that is, we cannot afford delays, including those caused by IT systems. IT is business critical.

The server room was designed to meet the needs of the plant; it was not intended to provide services to third-party companies; therefore, certification for compliance with any standards was not required. However, since our plant is a member of a large international holding, design and construction were carried out taking into account internal corporate standards. And these standards are, at least partially, based on international ones.

The TIA 942 standard is very extensive and describes in detail approaches to the design and construction of data centers. In addition, the appendix contains a large table with more than two hundred parameters for compliance with the four data center levels. Naturally, it is not practical to consider all of them here, and some of them, for example “Separate parking for visitors and employees,” “Thickness of the concrete slab at ground level,” and “Proximity to airports,” have little to do with the classification of data centers, let alone with how they differ from a server room. Therefore, we will consider only the parameters that are, in my opinion, the most important.

Basic parameters for data center classification

The standard establishes criteria in two categories - mandatory and recommended. Mandatory ones are indicated by the word “shall”, recommended ones by the words “should”, “may” or “desirable”.

The first and most important criterion is the level of operational readiness (availability). According to TIA 942, a data center of the highest - fourth - level must have 99.995% availability (i.e. no more than about 26 minutes of downtime per year). Then, in descending order, come 99.982%, 99.749% and 99.671% for the first level, which already corresponds to about 28.8 hours of downtime per year. The criteria are quite strict, but how is data center availability measured? Only downtime of the entire data center caused by one of the life support systems is counted; downtime of individual servers does not affect the operational readiness of the data center. That being so, the most likely cause of failure is rightly considered to be interruptions in the power supply.
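The downtime figures follow from simple arithmetic on the availability percentages. A minimal sketch, assuming a 365-day year; the function name is illustrative and not part of the standard, and the percentages are the ones quoted in the text.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, leap years ignored


def annual_downtime_hours(availability_pct: float) -> float:
    """Maximum downtime per year, in hours, allowed by an availability percentage."""
    return (100.0 - availability_pct) / 100.0 * HOURS_PER_YEAR


# Availability levels as quoted in the text, from the fourth level down to the first.
for level, pct in [(4, 99.995), (3, 99.982), (2, 99.749), (1, 99.671)]:
    hours = annual_downtime_hours(pct)
    print(f"Level {level}: {pct}% -> {hours:.2f} h ({hours * 60:.0f} min) per year")
```

For 99.995% this gives roughly 0.44 hours (about 26 minutes) per year, and for 99.671% roughly 28.8 hours.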

Our server room has a powerful APC UPS with N+1 redundancy and an additional battery cabinet, which can keep not only the servers but all the computers in the enterprise running for up to 7 hours (why would we need running servers if there is no one to connect to them?). Over three years of operation there has never been a failure, so on this parameter we can claim the highest TIER 4.

Speaking of power supply, the third and fourth classes of data centers require a second power input. We don't have one, so the maximum is second class. The standard also classifies power consumption per square meter of area. A strange parameter; I had never thought about it. I measured it: I have 6 kW per 20 square meters, that is, 300 W per square meter (only the first level). Although it is possible that I am calculating this incorrectly: the standard states that a good data center must have free space for scaling. That is, it turns out that the greater the “scaling margin”, the lower the level of the data center, whereas it should be the other way around. Here we have the lowest rating, but we still meet the standard.

For me, an important parameter is the connection point for external telecommunication systems. We interact online with clients to receive orders and ship components; therefore, a lack of communication can lead to a stop in our clients’ conveyor belts. And this will not only negatively affect our reputation, but will also lead to serious fines. It’s interesting that the standard itself talks about duplicating communication input points, but the appendix says nothing about this (although it states that at levels above the first, all subsystems must be redundant). We use two connection channels with automatic routing in case of failure in one of them, plus a backup GPRS router with manual connection. Here again we meet the highest requirements.

A significant part of the standard is devoted to cable networks and systems. These are distribution points for the main and vertical subsystems of the overall data center cabling system and cabling infrastructure. After reading several parts of this section, I realized that I either need to memorize it or give up and concentrate on more important things. At a superficial glance (category 6 twisted pair, separation of active equipment from passive), we do comply with the standard, although I am not sure about such parameters as the distance between cabinets, the bending angles of the trays and the correct spacing of the routes for low-current cables, optics and power. We will assume that here we partially meet the requirements.

Air conditioning systems: there are air conditioners, there is redundancy, and you could even say there is a cold and a hot aisle (though only one of each, due to the size of the room). But the cold air is not distributed under the false floor, as recommended, but directly in the work area. And we don’t control humidity, which according to the standard is an omission. We record a partial match.

A separate part is devoted to raised floors. The standard regulates both the height and the load on them. Moreover, the higher the class of the data center, the higher and more powerful the false floors should be. We have them, and in terms of height and loads they correspond to the second class of data centers. But my opinion is that the presence of false floors should not be a criterion, much less a characteristic of a data center. I was in the data center of the WestCall company, where they initially abandoned false floors, placing all the trays under the ceiling. Air conditioning is done with cold and hot aisles. The building is separate, the premises are large, and specific services are provided. That is, a good, “real” data center, but it turns out that without false floors it formally does not meet the standard.

The next important point is the security system. Large data centers are guarded almost like safe deposit boxes in a bank, and getting there is a whole procedure, starting from approval at different levels and ending with changing clothes and shoe covers. Ours is simpler, but everything is there: physical security is provided by a private security company, which also guards the plant itself, and the access control system ensures that only authorized employees enter the premises. Let's put a plus sign.

And finally, a gas fire extinguishing system. The main and reserve cylinders, sensors in the room itself, under the floor and above the ceiling and a control system - everything is there. By the way, an interesting point. When companies want to show off their data center, the first thing they show is the fire extinguishing system. Probably because this is the most unusual element of a data center, not found almost anywhere except data centers, and the rest of the equipment simply looks like cabinets of different colors and sizes.

The main difference, in my opinion, between the two upper levels of the data center and the lower ones is that they should be located in a separate building. It would seem that this is the sacred meaning of the difference between a server room and a data center: if it is separated into a separate building, then it is a data center. But no, the standard says that the first two levels are also data centers.

I finally found a parameter on which my server room does not qualify as a data center: the size of the front door. According to the standard, it should be at least 1.0×2.13 m, and preferably 1.2×2.13 m. But we have an ordinary door: 0.9×2.0 m. This is a minus, but treating the size of the front door as a criterion for distinguishing a data center from a server room is not serious.

Almost a real data center!

So what have we got? A small server room at a factory meets almost all the requirements of the standard for organizing a data center, albeit with minor reservations. The only major discrepancy is the size of the front door. The absence of a separate building for the server room leaves no chance of reaching the top levels. This means that the assumption that a data center is necessarily large, and a server room, on the contrary, is always small, is incorrect. The same goes for the second assumption, that a data center serves many client companies. It follows from all this that a server room is just a synonym for a data center.

The concept of a data center appeared when they began to sell hosting services, renting racks and hosting servers. At that time, the concept of a server room was devalued by a negligent attitude towards infrastructure due to the unpretentiousness of PCs and the low cost of downtime. And, in order to show that the provider has everything built for convenient and trouble-free operation, and they are able to guarantee the quality of the service, they introduced the concept of data centers, and then the standards for their construction. Given the trends of centralization, globalization and virtualization, I think that the concept of a server room will soon disappear or turn into a designation for a telecommunications hub.

I believe our President is counting on approximately the same thing with the law on the police. The concept of “militia” has been devalued, and it is too late to create new rules for it. Whether it will be possible to build competent standards for the new structure, we'll see in the near future.